The skull cap is thick and flat. It looks distinctively human, and yet its massive brow ridge, hanging over the eyes like a bony pair of goggles, is impossible to ignore. In 1857, an anatomist named Hermann Schaafhausen stared at the skull cap in his laboratory at the University of Bonn and tried to make sense of it. Quarry workers had found it the year before in a cave in a valley called Neander. A schoolteacher had saved the skull cap, along with a few other bones, from destruction and brought it to Schaafhausen to examine. And now Schaafhausen had to make the call. Was it human? Or was it some human-like ape?

Schaafhausen did not have much help to fall back on. At the time, archaeologists had found only faint hints that humans had coexisted with fossil animals, such as spears buried in caves near the bones of hyenas. Charles Darwin was still two years away from publishing the *Origin of Species* and providing a theory to make sense of human evolution. Naturalists tended to look at humanity as a collection of races arranged in a rank from savagery to civilization. The most savage races barely ranked above apes, while the naturalists themselves, of course, belonged to the race at the top of the ladder. When anatomists looked at human bodies, they found what they thought was a validation of this hierarchy: differences in the size of skulls, the slopes of brows, the width of noses. Yet all their attempts to neatly sort humanity were bedeviled by the tremendous variation in our species. Within a single so-called race, people varied in color, height, and facial features, even in their brow ridges. Schaafhausen knew, for example, about a skull dug up from an ancient grave in Germany that “resembled that of a Negro,” as he later wrote.

To make sense of the “Neanderthal cranium,” as he called it, Schaafhausen tried to fit it into this confusing landscape of human variation. As peculiar as the bone was, he decided it must belong to a human. It was very much unlike the cranium of living Europeans, but Schaafhausen speculated that it belonged to an ancient forerunner. Yet for naturalists of Schaafhausen’s age, such a heavy brow ridge implied not the advanced refinement of European civilization, but wild savagery. Well, Schaafhausen thought, Europeans were pretty savage back in the day. “Even of the Germans,” Schaafhausen wrote in his report on the Neanderthal cranium, “Caesar remarks that the Roman soldiers were unable to withstand their aspect and the flashing of their eyes, and that a sudden panic seized his army.” Schaafhausen found many other passages in classical history that suggested to him a practically monstrous past for Europe. “The Irish were voracious cannibals, and considered it praiseworthy to eat the bodies of their parents,” he wrote. Even in the 1200s, ancient tribes in Scandinavia still lived in the mountains and forests, wearing animal skins, “uttering sounds more like the cries of wild beasts than human speech.”

Surely this heavy-browed Neanderthal would have fit right in.

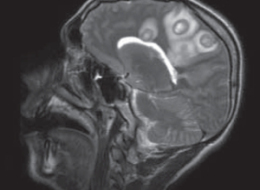

Some 150 years later, pieces of that original Neanderthal cranium sit in another laboratory, in Leipzig, just 230 miles from Schaafhausen’s lab. This one is filled not with calipers but with a different set of measuring tools: ones that can read out sequences of DNA that have been hiding in Neanderthal fossils for 50,000 years or more. And today a team of scientists based at the Max Planck Institute for Evolutionary Anthropology published a rough draft of the entire Neanderthal genome.

It is a historic day, but it reminds us, once again, that the publication of a genome does not automatically answer all the questions scientists have about the organism to which the genome belongs. In fact, a careful look at the new report is a humbling experience. We gaze at the Neanderthal genome today as Schaafhausen gazed at the Neanderthal skull cap that first introduced us to these ambiguous humans.

Since Schaafhausen’s day, paleoanthropologists have discovered Neanderthals across a huge range stretching from Spain to Israel to Siberia. Their fossils range from about 400,000 years ago to about 28,000 years ago. Instead of a lone skull cap, scientists now have just about every bone in the Neanderthal skeleton. Neanderthals were stocky and strong, with a brain about the size of our own. The isotopes in their bones suggest a diet rich in meat, and their fractured bones suggest a rough time getting that food. There’s no evidence that Neanderthals could paint spectacular images of rhinos and deer on cave walls as humans did. But they still left behind many traces of very sophisticated behavior, from intricate tools to painted jewelry.

Ideas about our own kinship to Neanderthals have swung dramatically over the years. For many decades after their initial discovery, paleoanthropologists only found Neanderthal bones in Europe. Many researchers decided, like Schaafhausen, that Neanderthals were the ancestors of living Europeans. But in this view the Neanderthals were also part of a much larger lineage of humans that spanned the Old World. Their peculiar features, like the heavy brow, were just a local variation. Over the past million years, the linked populations of humans in Africa, Europe, and Asia all evolved together into modern humans.

In the 1980s, a different view emerged: all living humans could trace their ancestry to a small population in Africa perhaps 150,000 years ago. They spread out across all of Africa, and then moved into Europe and Asia about 50,000 years ago. If they encountered other hominins in their way, such as the Neanderthals, they did not interbreed. Eventually, only our own species, the African-originating *Homo sapiens*, was left.

The evidence scientists marshalled for this “Out of Africa” view of human evolution took the form of both fossils and genes. The stocky, heavy-browed Neanderthals did not evolve smoothly into slender, flat-faced Europeans, scientists argued. Instead, modern-looking Europeans just popped up about 40,000 years ago. What’s more, they argued, those modern-looking Europeans resembled older humans from Africa.

At the time, geneticists were learning how to sequence genes and compare different versions of the same genes among individuals. Some of the first genes that scientists sequenced were in the mitochondria, little blobs in our cells that generate energy. Mitochondria also carry DNA, and they have the added attraction of being passed down only from mothers to their children. The mitochondrial DNA of Europeans was much closer to that of Asians than either was to Africans. What’s more, the diversity of mitochondrial DNA among Africans was huge compared to the rest of the world. These sorts of results suggested that living humans shared a common ancestor in Africa. And the number of mutations in each branch of the human tree suggested that that common ancestor lived about 150,000 years ago, not a million years ago.

Over the past 30 years, scientists have battled over which of these views, multiregionalism or Out of Africa, is right. And along the way, they’ve also developed more complex variations that fall in between the two extremes. Some have suggested, for example, that modern humans emerged out of Africa in a series of waves. Some have suggested that modern humans and other hominins interbred, leaving us with a mix of genetic material.

Reconstructing this history is important for many reasons, not the least of which is that scientists can use it to plot out the rise of the human mind. If Neanderthals could make their own jewelry 50,000 years ago, for example, they might well have had brains capable of recognizing themselves as both individuals and as members of a group. Humans are the only living animals with that package of cognitive skills. Perhaps that package had already evolved in the common ancestor of humans and Neanderthals. Or perhaps it evolved independently in both lineages.

In the 1990s, the Swedish geneticist Svante Paabo led a team of scientists in search of a new kind of evidence to test these ideas: ancient DNA. They were able to extract bits of DNA from bones that were found along with Schaafhausen’s skull cap in the Neander valley cave. Despite being 42,000 years old, the fossils still retained some genetic material. But reading that DNA proved to be a colossal challenge. Over thousands of years, DNA breaks into tiny pieces, and some of the individual “letters” (or nucleotides) in the Neanderthal genes become damaged, effectively turning parts of its genome into gibberish. It’s also hard to isolate Neanderthal DNA from the far more abundant DNA of microbes that live in the fossils today. And the scientists themselves can contaminate the samples with their own DNA as well.

Over the years, Paabo and his colleagues have found ways to overcome a lot of these problems. They’ve also taken advantage of the awesome leaps that genome-sequencing technology has made since they started the project. They have been able to reconstruct bigger and bigger stretches of DNA. They’ve been able to fish them out of a number of Neanderthal fossils from many parts of the Old World. And today they can offer us a rough picture of all the DNA it takes to be a Neanderthal.

To create a rough draft of the Neanderthal genome, the scientists gathered DNA from the fossils of several Neanderthals that lived in Croatia about 40,000 years ago. They sequenced fragments of DNA totalling more than 4 billion nucleotides. To figure out where each fragment belonged, and on which chromosome, they lined up the Neanderthal DNA against the genomes of humans and chimpanzees. They are still far from a precise read on all 3 billion nucleotides in the Neanderthal genome. But they were able to zero in on many regions of the rough draft and get a much finer picture of interesting genes.

One of the big questions the scientists wanted to tackle was how those interesting genes evolved over the past six million years, since our ancestors split off from the ancestors of chimpanzees. So they compared the Neanderthal genome to the genome of chimpanzees, as well as to humans from different regions of the world, including Africa, Europe, Asia, and New Guinea.

This comparison is tricky because human DNA, like human skulls, is loaded with variation. The DNA of any two people can differ at millions of spots. Those differences may consist of as little as a single nucleotide, or of a long stretch of duplicated DNA. Each of us is born with a few dozen new mutations, but most of the variants in our genome have been circulating in our species for centuries, millennia, and, in some cases, hundreds of thousands of years. Over the course of history these variants have gotten mixed and matched in different human populations. Some of them vary from continent to continent. It’s possible to tell someone from Nigeria from someone from China based on just a couple hundred genetic markers. But a lot of the same variants that Chinese people carry also exist in Nigeria. That’s because Chinese people and Nigerians descend from a common ancestral population. The gene variants first arose in that ancestral population and then were passed down from generation to generation, even as humans migrated and diverged across the planet. And when Paabo and his colleagues looked at the Neanderthal genome, they discovered that Neanderthals carried some of the same variants too.

The scientists compared the variants in the Neanderthal genome to those in humans to figure out when the two kinds of humans diverged. They estimate that the two populations became distinct between 270,000 and 440,000 years ago. After the split, our own ancestors continued to evolve. It’s possible that genes that evolved after that split helped to make us uniquely human. To identify some of those genes, Paabo and his colleagues looked for genes that were identical in Neanderthals and chimpanzees, but had undergone a significant change in humans.

They didn’t find many. In one search, they looked for protein-coding genes. Genes give cells instructions for how to assemble amino acids into proteins. Some mutations don’t change the final recipe for a protein, while some do. Paabo and his colleagues found that just 78 human genes have evolved to make a new kind of protein, differing from the ancestral form by one or more amino acids. (We have, bear in mind, roughly 20,000 protein-coding genes.) Only five genes have more than one altered amino acid.

The scientists also found some potentially important changes in stretches of human DNA that don’t encode proteins. Some of these non-coding stretches act as switches for neighboring genes. Others encode microRNAs, tiny single-stranded RNA molecules. MicroRNAs can act as volume knobs for other genes, boosting or squelching the proteins they make.

Another way to look for uniquely human DNA is to search for stretches of genetic material that still retain the fingerprint of natural selection. In the case of many genes, several variants of the same gene have coexisted for hundreds of thousands of years. Some variants found in living humans also turn up in the Neanderthal genome. But there are some cases in which natural selection has strongly favored humans with one variant of a gene over others. Sometimes the selection has been so strong that all the other variants have vanished. Today, living humans all share one successful variant, while the Neanderthal genome contains one that no longer exists in our species. The scientists discovered 212 regions of the human genome that have experienced this so-called “selective sweep.”

You can see the full list of all these promising pieces of DNA in the paper Paabo and his colleagues published today. If you’re looking for a revelation of what it means to be human, be prepared to be disappointed by a dreary catalog of sterile names like RPTN and GREB1 and OR1K1. You may find yourself with a case of *Yet Another Genome Syndrome*. In all fairness, the scientists do take a crack at finding meaning in their catalog. They note that a number of evolved genes are active in skin cells. But does that mean that we evolved a new kind of skin color? A new way of sweating? A better ability to heal wounds? At this point, nobody really knows.

If you believe the difference between humans and Neanderthals is primarily in the way we think, then you may be intrigued by the strongly selected genes that have been linked to the brain. These genes got their links to the brain thanks to the mental disorders that they can help produce when they mutate. For example, one gene, called AUTS2, gets its name from its link to autism. Another strongly selected human gene, NRG3, has been linked to schizophrenia. Unfortunately, these disease associations just tell scientists what happens when these genes go awry, not what they do in normal brains.

The most satisfying hypothesis the scientists offer is also the one with the deepest historical resonance. It has to do with the brow ridge that so puzzled Schaafhausen back in 1857. One of the strongly selected genes in humans, known as RUNX2, has been linked to a condition known as cleidocranial dysplasia. People who suffer from this condition have a bell-shaped rib cage, deformed shoulder bones, and a thick brow ridge. All three traits distinguish Neanderthals from humans.

Paabo and his colleagues then turned to the debate over what happened when humans emerged from Africa. Scientists have long wondered what happened when our ancestors encountered Neanderthals and other extinct hominin populations. Some have argued that they kept their distance and never interbred. Others have scoffed at the idea that any human could show such self-restraint. After all, humans have been known to have sex with all sorts of mammals when given the opportunity, so why should they have been so scrupulous about a very human-like mammal?

The evidence that scientists have gathered up till now has been very confusing. If you just look at mitochondria, for example, all the Neanderthal sequences form tiny twigs on a branch that’s distant from the human branch. If Neanderthals and humans had interbred often enough, we might expect some people today to carry mitochondrial DNA that is more like that of Neanderthals than like that of other humans. No one has turned up with such DNA.

On the other hand, some scientists looking at other genes have found what they claim is evidence of interbreeding. They have found gene variants in living humans that evolved from an ancestral gene about a million years ago.

One way to explain this pattern was to propose that modern humans interbred with Neanderthals or other hominins. Some of their DNA then entered our gene pool and has survived till today. In one case, a team of scientists proposed that a gene variant called Microcephalin D hopped into our species from Neanderthals and then spread very quickly, driven perhaps by natural selection. Making this hypothesis even more intriguing was the fact that the gene is involved in building the brain.

Paabo and his colleagues looked for pieces of the Neanderthal genome scattered in the genomes of living humans. The scientists found that on average, the Neanderthal genome is a little more similar to the genomes of people in Europe, China, and New Guinea than it is to the genomes of people from Africa. After carefully comparing the most similar segments of the genomes, the scientists propose that Neanderthals interbred with the first immigrants out of Africa, perhaps in the Middle East, where the bones of both early humans and Neanderthals have been found.
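For readers curious how “a little more similar” can be turned into a number, here is a minimal Python sketch of one widely used site-pattern comparison, often called the ABBA-BABA test or Patterson’s D. It is an illustration, not the paper’s full method: it assumes we already have aligned sites where each genome carries one of two alleles, and it treats the chimpanzee allele as the ancestral one. The function name `d_statistic` and the toy sites are made up for the example.

```python
# Minimal sketch of a site-pattern (ABBA-BABA) comparison.
# Assumptions: sites are aligned and biallelic, and the chimpanzee
# allele stands in for the ancestral state. Toy data only.

def d_statistic(sites):
    """Return Patterson's D for sites given as (human1, human2, neanderthal, chimp).

    ABBA: human1 is ancestral, human2 shares the Neanderthal's derived allele.
    BABA: human1 shares the Neanderthal's derived allele, human2 is ancestral.
    D > 0 means human2 shares more derived alleles with the Neanderthal.
    """
    abba = baba = 0
    for h1, h2, nea, chimp in sites:
        if nea == chimp:
            continue  # Neanderthal carries the ancestral allele: uninformative
        if h1 == chimp and h2 == nea:
            abba += 1
        elif h1 == nea and h2 == chimp:
            baba += 1
    if abba + baba == 0:
        return 0.0  # no informative sites
    return (abba - baba) / (abba + baba)


# Hypothetical toy sites: "A" = ancestral (chimp) allele, "B" = derived allele.
# human1 plays the role of an African genome, human2 a non-African genome.
toy_sites = [
    ("A", "B", "B", "A"),  # ABBA
    ("A", "B", "B", "A"),  # ABBA
    ("A", "B", "B", "A"),  # ABBA
    ("B", "A", "B", "A"),  # BABA
    ("B", "B", "B", "A"),  # both humans derived: uninformative
    ("A", "A", "A", "A"),  # no change anywhere: uninformative
]

print(d_statistic(toy_sites))  # 0.5
```

If the two humans were equally related to the Neanderthal, ABBA and BABA sites should turn up about equally often and D should hover near zero. A consistently positive D is the kind of excess sharing that interbreeding would produce, although ancient population structure within Africa could in principle produce a similar signal.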

Today, the people of Europe and Asia have genomes that are 1 to 4 percent Neanderthal. That interbreeding doesn’t seem to have meant much to us in any biological sense. None of the segments our species picked up from Neanderthals shows signs of having been favored by natural selection. (Microcephalin D turns out to have been nothing special.)

While working on this post, I contacted two experts who have been critical of some earlier studies on hominin interbreeding, Laurence Excoffier of the University of Bern and Nick Barton of the University of Edinburgh. Both scientists gave the Neanderthal genome paper high marks and agreed in particular that the interbreeding hypothesis is a good one. But they do think some alternative hypotheses have to be tested. For example, interbreeding is not the only way that some living humans might have ended up with Neanderthal-like pieces of DNA. Cast your mind back 500,000 years, before the populations of humans and Neanderthals had diverged. Imagine that those ancestral Africans were not trading genes freely. Instead, imagine that some kind of barrier emerged to keep some gene variants in one part of Africa and other variants in another part.

Now imagine that the ancestors of Neanderthals leave Africa, and then much later the ancestors of Europeans and Asians leave Africa. It’s possible that both sets of emigrants came from the same part of Africa. They might both have taken with them some gene variants that did not exist in other parts of Africa. Today, some living Africans still lack those variants. This scenario could leave Europeans and Asians with Neanderthal-like pieces of DNA without a single hybrid baby ever being born.

If humans and Neanderthals did indeed interbreed, Excoffier thinks there’s a huge puzzle to be solved. The new paper suggests that genes flowed from Neanderthals to humans only at some point between 50,000 and 80,000 years ago, before Europeans and Asians diverged. Yet we know that humans and Neanderthals coexisted for another 20,000 years in Europe, and probably about as long in Asia. If humans and Neanderthals had interbred during that later period, Excoffier argues, the evidence should be sitting in the genomes of Europeans or Asians. The fact that the evidence is not there means that somehow humans really did find the self-restraint not to mate with Neanderthals.

Because interbreeding involves sex, it dominates the headlines about Paabo’s research. But I’m left wondering about the Neanderthals themselves. We now have a rough draft of the operating instructions for a kind of human that has been gone from the planet for 28,000 years, one that had its own kind of culture, its own way of getting through the world. Yet I found very little in the paper about what the Neanderthal genome tells us about its owners. It’s wonderful to use the Neanderthal genome as a tool for subtracting away our ancestral DNA and figuring out what makes us uniquely human. But it would also be great to know what made Neanderthals uniquely Neanderthal.